Business Intelligence Tools to Merge Duplicate Records: A Deep Dive

In modern business, the ability to manage and analyze information effectively is essential. Data, however, is rarely pristine: it arrives in different formats, from disparate sources, and with inconsistencies. One of the most common problems is duplicate records, which can skew analysis, lead to incorrect conclusions, and ultimately undermine decision-making. Business intelligence tools address this by identifying and merging duplicate records, which protects data accuracy and integrity. This article explores the role these tools play, covering their functionality, benefits, and selection criteria.

The Perils of Duplicate Records

Duplicate records are a ubiquitous problem across industries. They arise from data entry errors, system integration issues, and data migration processes. Imagine a customer whose information exists several times in a CRM system, with each record holding slightly different details. This can have several detrimental consequences:

- Inaccurate Reporting: Key performance indicators (KPIs) will be distorted. This affects sales figures, customer counts, and other critical metrics.

- Inefficient Marketing: Marketing campaigns may target the same customer multiple times. This can lead to customer annoyance and wasted resources.

- Poor Customer Service: Support representatives may not have a complete view of a customer’s history. This hinders their ability to provide effective solutions.

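- Compliance Risks: Duplicate records complicate compliance with data privacy regulations such as GDPR and CCPA. For example, honoring a customer's request to access or delete their data requires finding every record that belongs to that person.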

Addressing duplicate records is not merely a matter of data hygiene; it determines whether the entire business intelligence ecosystem can be trusted. Clean, accurate data is the foundation for informed decision-making, and without it, organizations build on unstable ground.

How Business Intelligence Tools Address Duplicates

Business intelligence tools offer a set of functions to identify and merge duplicate records. Rather than relying on exact keyword matches, they compare several data points to estimate how similar two records are. The key features are:

- Data Profiling: Tools allow users to examine data quality and identify potential issues. They provide insights into the frequency of duplicates and the characteristics of the data.

- Data Cleansing: This involves standardizing data formats, correcting inconsistencies, and filling in missing values. Data cleansing prepares the data for duplicate detection.

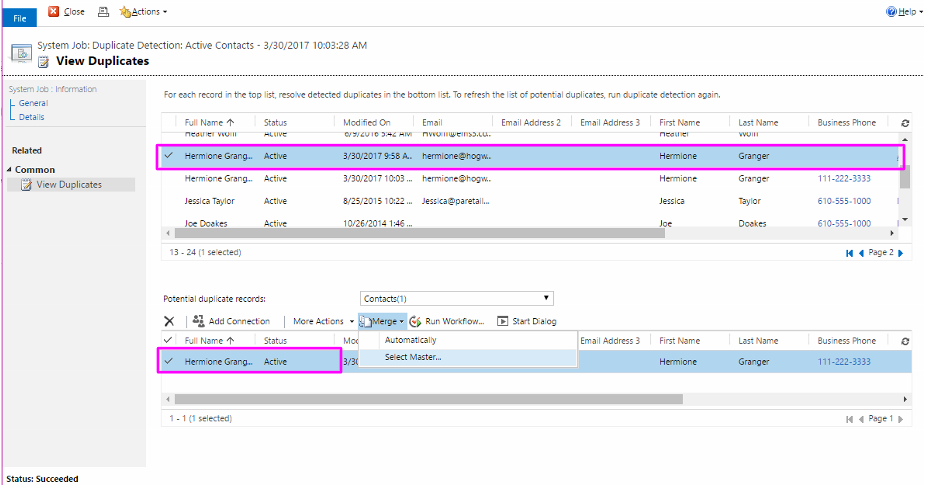

- Duplicate Detection: Matching algorithms compare records on fields such as name, address, phone number, email, and other relevant attributes. The tools assign a similarity score to each candidate pair of records.

- Record Merging: Based on the similarity scores, tools suggest merges or perform them automatically. Users define rules for selecting the master record and for choosing which field values to retain.

- Data Transformation: Some tools offer the ability to transform data during the merging process. This includes data standardization and consistency checks.

- Audit Trails: Many business intelligence tools provide audit trails. These track all changes made to the data. This ensures data governance and accountability.
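The cleansing and duplicate-detection steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular tool works: the field names (`name`, `email`, `phone`), the field weights, and the 0.85 threshold are hypothetical, and similarity is measured with the standard library's `difflib.SequenceMatcher` as a stand-in for the fuzzy-matching algorithms commercial tools use.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical field weights; they sum to 1.0 so scores fall in [0, 1].
WEIGHTS = {"name": 0.4, "email": 0.4, "phone": 0.2}


def cleanse(record: dict) -> dict:
    """Standardize fields so formatting differences do not hide duplicates."""
    name = re.sub(r"[^a-z0-9\s]", "", record.get("name", "").lower())
    return {
        "name": " ".join(name.split()),                       # collapse extra spaces
        "email": record.get("email", "").strip().lower(),
        "phone": re.sub(r"\D", "", record.get("phone", "")),  # keep digits only
    }


def field_similarity(a: str, b: str) -> float:
    """Fuzzy similarity in [0, 1]; a missing value never counts as a match."""
    if not a or not b:
        return 0.0
    return SequenceMatcher(None, a, b).ratio()


def record_similarity(r1: dict, r2: dict) -> float:
    """Weighted average of the per-field similarities."""
    return sum(w * field_similarity(r1[f], r2[f]) for f, w in WEIGHTS.items())


def find_duplicate_pairs(records: list[dict], threshold: float = 0.85) -> list[tuple]:
    """Score every pair of cleansed records and keep those at or above the threshold."""
    cleaned = [cleanse(r) for r in records]
    pairs = []
    for i, j in combinations(range(len(cleaned)), 2):
        score = record_similarity(cleaned[i], cleaned[j])
        if score >= threshold:
            pairs.append((i, j, round(score, 3)))
    return pairs


SAMPLE_RECORDS = [
    {"name": "Jane  Smith", "email": "Jane.Smith@Example.com", "phone": "(555) 123-4567"},
    {"name": "jane smith", "email": "jane.smith@example.com ", "phone": "555.123.4567"},
    {"name": "John Doe", "email": "jdoe@example.org", "phone": "555-987-6543"},
]

print(find_duplicate_pairs(SAMPLE_RECORDS))  # [(0, 1, 1.0)]
```

The first two records look different before cleansing but become identical afterwards, which is why standardization usually runs before matching. Comparing every pair grows quadratically with the number of records, and the weights and threshold would need tuning against real data.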

Specific features vary from tool to tool, but the core objective is the same: to streamline the identification and merging of duplicate records, which improves data quality and makes business insights more reliable.
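The merging step can be illustrated with a simple survivorship rule. In this sketch the most recently updated record becomes the master, and empty master fields are filled from the other duplicates, newest first. Every fill and every absorbed record is written to an audit log. The `Customer` class, the rule itself, and the log format are assumptions for illustration; real tools let users configure such rules.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Customer:
    id: int
    updated: date  # last-modified date, used by the survivorship rule
    fields: dict


def merge_group(group: list[Customer], audit_log: list[dict]) -> Customer:
    """Merge one group of duplicates into a single surviving record."""
    # Assumed rule: the most recently updated record is the master.
    ordered = sorted(group, key=lambda c: c.updated, reverse=True)
    master, others = ordered[0], ordered[1:]
    merged = dict(master.fields)

    # Fill empty master fields from the other duplicates, newest first.
    for other in others:
        for name, value in other.fields.items():
            if value and not merged.get(name):
                merged[name] = value
                audit_log.append({"action": "fill", "master_id": master.id,
                                  "source_id": other.id, "field": name})

    # Record which duplicates were absorbed into the master.
    for other in others:
        audit_log.append({"action": "retire", "master_id": master.id,
                          "source_id": other.id})
    return Customer(master.id, master.updated, merged)


GROUP = [
    Customer(1, date(2023, 1, 5), {"name": "Jane Smith", "email": "jane@example.com", "phone": ""}),
    Customer(7, date(2024, 3, 2), {"name": "Jane Smith", "email": "jane.smith@example.com", "phone": ""}),
    Customer(9, date(2022, 6, 1), {"name": "J. Smith", "email": "", "phone": "5551234567"}),
]

log: list[dict] = []
survivor = merge_group(GROUP, log)
print(survivor.id, survivor.fields)
# 7 {'name': 'Jane Smith', 'email': 'jane.smith@example.com', 'phone': '5551234567'}
print(len(log))  # 3
```

Keeping the audit entries separate from the merged record means a reviewer can later see that the phone number came from record 9 and that records 1 and 9 were retired.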

Benefits of Using Business Intelligence Tools for Duplicate Record Merging

Implementing business intelligence tools to merge duplicate records yields benefits that extend beyond data quality to overall business performance. Key advantages include:

- Improved Data Accuracy: Removing duplicates ensures that reports and analyses are based on reliable data. This leads to more accurate insights and better decision-making.

- Enhanced Operational Efficiency: Streamlining data management processes reduces manual effort. This frees up resources for other critical tasks.

- Increased Customer Satisfaction: Accurate customer data enables personalized interactions. This leads to improved customer service and increased satisfaction.

- Reduced Marketing Costs: Eliminating duplicate contacts prevents wasted marketing spend. It also improves the effectiveness of marketing campaigns.

- Better Compliance: Maintaining clean data makes it easier to comply with data privacy regulations. It mitigates the risk of penalties and legal issues.

- Enhanced Data-Driven Decision Making: With clean data, organizations can make more informed decisions. They can also identify trends and opportunities more effectively.

The return on investment (ROI) from using business intelligence tools for duplicate record merging can be substantial, especially for organizations that rely heavily on data in their operations, such as businesses in retail, finance, healthcare, and e-commerce.

Selecting the Right Business Intelligence Tool

Choosing the appropriate business intelligence tool depends on several factors. These factors include the size of the organization, the complexity of the data, and the specific requirements. Consider the following when evaluating different options:

- Data Volume and Complexity: Assess how much data must be processed and how complex its structures are, then choose a tool that can handle both.

- Duplicate Detection Algorithms: Evaluate how sophisticated the tool's matching is. Look for advanced techniques such as fuzzy matching, which tolerates typos and formatting differences, and phonetic algorithms, which match names that sound alike (for example, Smith and Smyth).

- Data Integration Capabilities: The tool should integrate seamlessly with existing data sources. These sources may include databases, CRM systems, and spreadsheets.

- Data Cleansing Features: Ensure the tool offers robust data cleansing capabilities. This includes data standardization, validation, and transformation.

- User Interface and Ease of Use: The tool’s user interface should be intuitive and easy to navigate. This ensures that users can quickly learn and utilize the tool.

- Reporting and Analytics: The tool should provide reporting and analytics capabilities. This allows users to monitor data quality and track the effectiveness of duplicate merging efforts.

- Scalability and Performance: The tool should be able to handle future data growth. It should also maintain optimal performance as data volumes increase.

- Cost and Budget: Consider the total cost of ownership, including licensing fees, implementation costs, and ongoing maintenance.
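Phonetic algorithms and scalability are related in practice. A common technique, known as blocking, groups records by a cheap key and compares only records within the same group, which avoids comparing every pair. The sketch below uses the classic American Soundex code of a last name as the blocking key; the `last_name` field and the choice of Soundex as the key are assumptions for illustration, and real tools may use other phonetic or blocking schemes.

```python
from collections import defaultdict
from itertools import combinations

# American Soundex digit codes; vowels, y, h and w have no code.
SOUNDEX_CODES = {ch: digit
                 for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                                        ("l", "4"), ("mn", "5"), ("r", "6"))
                 for ch in letters}


def soundex(name: str) -> str:
    """Return the four-character American Soundex code, e.g. Robert -> R163."""
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0].upper()
    prev = SOUNDEX_CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # h and w do not break a run of identical codes
        code = SOUNDEX_CODES.get(ch, "")  # vowels and y map to "" and reset the run
        if code and code != prev:
            result += code
            if len(result) == 4:
                break
        prev = code
    return result.ljust(4, "0")  # pad short codes with zeros


def candidate_pairs(records: list[dict]) -> list[tuple[int, int]]:
    """Blocking: only records that share a Soundex key are paired for comparison."""
    blocks = defaultdict(list)
    for idx, rec in enumerate(records):
        blocks[soundex(rec["last_name"])].append(idx)
    pairs = []
    for members in blocks.values():
        pairs.extend(combinations(members, 2))
    return sorted(pairs)


RECORDS = [{"last_name": n} for n in ("Smith", "Smyth", "Schmidt", "Jones", "Johns")]
print([soundex(r["last_name"]) for r in RECORDS])  # ['S530', 'S530', 'S530', 'J520', 'J520']
print(candidate_pairs(RECORDS))  # [(0, 1), (0, 2), (1, 2), (3, 4)]
```

Here five records produce four candidate pairs instead of ten. Schmidt lands in the same block as Smith and Smyth, so the blocking key only nominates candidates; a similarity score still has to decide whether they are true duplicates.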

Conduct thorough research and compare different business intelligence tools. Evaluate their features, pricing, and user reviews. Consider a trial period to assess the tool’s suitability for the specific needs of the organization.

Implementing a Successful Duplicate Record Merging Strategy

Simply acquiring a business intelligence tool is not enough. A successful duplicate record merging strategy requires careful planning and execution. Follow these best practices:

- Define Clear Objectives: Clearly define the goals of the duplicate record merging project. This includes identifying the key data sources and the desired outcomes.

- Establish Data Governance Policies: Implement data governance policies to ensure data quality and consistency. This includes defining data standards and data ownership.

- Prioritize Data Sources: Focus on cleaning the most critical data sources first. These sources have the greatest impact on business operations.

- Develop a Data Cleansing Plan: Create a detailed plan for data cleansing. This should include data standardization, validation, and transformation steps.

- Test and Validate: Thoroughly test the duplicate detection and merging processes. Validate the results to ensure accuracy and completeness.

- Monitor and Maintain: Continuously monitor data quality. Regularly update the duplicate merging rules. This ensures that the data remains clean over time.

- Train Users: Provide adequate training to users on how to use the tool. This ensures that they can effectively utilize its features.
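One way to test and validate matching rules is to compare the pairs a tool flags against a manually labeled sample and compute precision (the share of flagged pairs that are real duplicates) and recall (the share of real duplicates that were flagged). The functions below are a minimal sketch; their names and the pair IDs are hypothetical.

```python
def normalize_pairs(pairs) -> set:
    """Treat (a, b) and (b, a) as the same pair."""
    return {tuple(sorted(p)) for p in pairs}


def evaluate(predicted, labeled) -> dict:
    """Compare flagged pairs with labeled true duplicates."""
    predicted, labeled = normalize_pairs(predicted), normalize_pairs(labeled)
    true_pos = len(predicted & labeled)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(labeled) if labeled else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": round(precision, 3), "recall": round(recall, 3), "f1": round(f1, 3)}


flagged = {(0, 1), (2, 3), (4, 5), (6, 7)}
labeled_duplicates = {(1, 0), (2, 3), (8, 9)}
print(evaluate(flagged, labeled_duplicates))
# {'precision': 0.5, 'recall': 0.667, 'f1': 0.571}
```

Low precision suggests the rules are too loose and risk merging distinct customers; low recall suggests they are too strict and leave duplicates behind.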

A well-executed strategy minimizes disruption and maximizes the benefits of using business intelligence tools to merge duplicate records.

Future Trends in Business Intelligence and Duplicate Record Management

The field of business intelligence is constantly evolving. Advances in technology are impacting how organizations manage their data. Several trends are shaping the future of duplicate record management:

- Artificial Intelligence (AI) and Machine Learning (ML): AI and ML algorithms are becoming increasingly sophisticated. They can automate more of the duplicate detection and merging process and improve the accuracy of data matching.

- Cloud-Based Solutions: Cloud-based business intelligence tools are gaining popularity. They offer scalability, flexibility, and cost-effectiveness. They also provide easier data access and collaboration.

- Data Quality Automation: Automation is playing a larger role in data quality. Automated data cleansing, validation, and monitoring are becoming more common.

- Data Governance and Compliance: As governance and compliance requirements grow in importance, tools are evolving to support them, including features that help protect data privacy and security.

- Integration with Data Lakes and Data Warehouses: Tools are integrating with data lakes and data warehouses. This allows for centralized data management and analysis.

Organizations that embrace these trends will be better positioned to manage their data effectively and to make data-driven decisions.

Conclusion

Business intelligence tools are essential for tackling duplicate records. They offer a comprehensive set of functions to identify, merge, and manage duplicate data. By using these tools, organizations can significantly improve data accuracy, enhance operational efficiency, and make better decisions. Success depends on selecting the right tool, implementing a well-defined strategy, and keeping up with emerging trends. Clean, accurate data is crucial for organizations seeking to thrive in a data-driven world, and the careful selection and implementation of business intelligence tools is key to achieving it.